Rubrik: Archiving on Google Cloud Platform (GCP)

Your Rubrik platform is getting full and you are low on CAPEX, but you have plenty of OPEX? Then the cloud is for you. Setting up archiving in the cloud can be a complex process. I'm fairly new to this area myself, so I will try to explain how to set it up step by step.

Google is your friend!

Most of the time, yes. But when it comes to configuring buckets and object-store archiving, it can turn out to be anything but a friend.

Actions on the GCP Side

First, you need to prepare things on the GCP side, from the Google Cloud Console. Go to the Storage section and create a new bucket:

Note: bucket names must be unique across all of GCP, not just within your project, so pick a very specific name to avoid a "name already in use" error. For the bucket location, I recommend a Google datacenter as close as possible to your Rubrik infrastructure, as this will significantly improve data transfer performance. For the storage class, Google recommends Nearline for backup archives.
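If you prefer to script the bucket creation, here is a minimal Python sketch, assuming the `google-cloud-storage` client library is installed and credentials are configured. `bucket_name_problems` only checks the published naming syntax; global uniqueness can only be confirmed by the create call itself. Both function names are hypothetical helpers, and the project ID, bucket name and region you pass in are placeholders of your own choosing.

```python
import ipaddress
import re

# Syntax from the Cloud Storage naming guidelines: lowercase letters, digits,
# '-', '_' and '.', starting and ending with a letter or digit.
_NAME_RE = re.compile(r"^[a-z0-9][a-z0-9._-]*[a-z0-9]$")


def bucket_name_problems(name: str) -> list[str]:
    """Return naming-rule violations (empty list if none were found).

    Does NOT check global uniqueness: only bucket creation can tell you that.
    """
    problems = []
    max_len = 222 if "." in name else 63  # dotted names may be longer
    if not 3 <= len(name) <= max_len:
        problems.append(f"length must be 3-{max_len} characters")
    if not _NAME_RE.match(name):
        problems.append("use lowercase letters, digits, '-', '_', '.'; "
                        "start and end with a letter or digit")
    if any(len(part) > 63 for part in name.split(".")):
        problems.append("each dot-separated part must be at most 63 characters")
    if name.startswith("goog") or "google" in name:
        problems.append("must not start with 'goog' or contain 'google'")
    try:
        ipaddress.IPv4Address(name)  # raises ValueError if not an IP address
        problems.append("must not be an IP address")
    except ValueError:
        pass
    return problems


def create_archive_bucket(project_id: str, name: str, location: str):
    """Create a Nearline bucket (needs google-cloud-storage and credentials)."""
    from google.cloud import storage  # imported lazily: only needed here

    client = storage.Client(project=project_id)
    bucket = client.bucket(name)
    bucket.storage_class = "NEARLINE"  # class recommended for backup archives
    return client.create_bucket(bucket, location=location)
```

Checking the name locally first just saves a round trip; a "name already in use" error from `create_archive_bucket` still means you need a more specific name.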

Next, create a service account and have Google generate a key for it in JSON format. The downloaded key file looks like this (identifying values masked):

{
  "type": "service_account",
  "project_id": "xxxxxxxxxxxxxxxx",
  "private_key_id": "f9e95990cdxxxxxxxxxxxxxxe4fbc00ee",
  "private_key": "-----BEGIN PRIVATE KEY-----\nxxxxxxxxxxxxxxxxxxxxxxxx\n-----END PRIVATE KEY-----\n",
  "client_email": "rubrik-test-archive@xxxxxxxxxxxx.iam.gserviceaccount.com",
  "client_id": "10480000000000002470",
  "auth_uri": "https://accounts.google.com/o/oauth2/auth",
  "token_uri": "https://oauth2.googleapis.com/token",
  "auth_provider_x509_cert_url": "https://www.googleapis.com/oauth2/v1/certs",
  "client_x509_cert_url": "https://www.googleapis.com/robot/v1/metadata/x509/rubrik-test-archive%40xxxxxxxxxxx.iam.gserviceaccount.com"
}
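Before pasting the key into Rubrik, a quick sanity check can catch a truncated or wrong file. This is a minimal Python sketch; the helper name and the list of fields it checks are my own assumptions, based on the sample key above.

```python
import json

# Fields a service-account key file should carry (see the sample above).
REQUIRED_FIELDS = ("type", "project_id", "private_key_id", "private_key", "client_email")


def check_service_account_key(raw: str) -> list[str]:
    """Return problems found in the key file's text (empty list = looks OK)."""
    try:
        key = json.loads(raw)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc}"]
    if not isinstance(key, dict):
        return ["top-level value must be a JSON object"]

    problems = [f"missing field: {name}" for name in REQUIRED_FIELDS if not key.get(name)]
    if key.get("type") and key["type"] != "service_account":
        problems.append("type should be 'service_account'")
    pem = key.get("private_key")
    if isinstance(pem, str) and pem and not pem.startswith("-----BEGIN PRIVATE KEY-----"):
        problems.append("private_key does not look like a PEM private key")
    email = key.get("client_email")
    if isinstance(email, str) and email and not email.endswith("gserviceaccount.com"):
        problems.append("client_email is not a service-account address")
    return problems
```

Typical use is to read the downloaded file and print the result; an empty list means nothing obvious is wrong, not that the key is guaranteed to work.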

Go back to the bucket you created earlier and edit its permissions: add a new member, enter the service account you just created in the New Member section, and assign it the Storage Admin role:
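The same permission can be granted from code. This sketch assumes the `google-cloud-storage` library and follows the usual read-modify-write pattern on the bucket's IAM policy; `roles/storage.admin` is the IAM role ID behind Storage Admin, while `add_binding` and `grant_storage_admin` are hypothetical helpers.

```python
STORAGE_ADMIN = "roles/storage.admin"


def service_account_member(email: str) -> str:
    """IAM member string identifying a service account."""
    return f"serviceAccount:{email}"


def add_binding(bindings: list[dict], role: str, member: str) -> list[dict]:
    """Add member to an unconditional binding for role, without duplicates."""
    for binding in bindings:
        if binding["role"] == role and "condition" not in binding:
            binding["members"] = set(binding["members"]) | {member}
            return bindings
    bindings.append({"role": role, "members": {member}})
    return bindings


def grant_storage_admin(bucket_name: str, sa_email: str) -> None:
    """Apply the binding to a real bucket (needs google-cloud-storage + credentials)."""
    from google.cloud import storage  # imported lazily: only needed here

    bucket = storage.Client().bucket(bucket_name)
    policy = bucket.get_iam_policy(requested_policy_version=3)
    add_binding(policy.bindings, STORAGE_ADMIN, service_account_member(sa_email))
    bucket.set_iam_policy(policy)
```

Reading the current policy before writing it back avoids overwriting bindings that other members already have on the bucket.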

Actions on the Rubrik Side

The next step is on the Rubrik CDM side. Click the gear icon, then Archival Location, then the plus sign to reach this screen:

A few remarks here. The encryption password is something you choose; it should be complex, and it is used to encrypt the data on the fly as it is written to GCP.
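Rubrik's exact password rules aren't covered in this post, so treat the length and symbol set below as assumptions. This small Python sketch generates a random password with the standard `secrets` module, which is intended for security-sensitive randomness (unlike `random`).

```python
import secrets
import string

# Assumed character set: letters, digits and a few common symbols.
ALPHABET = string.ascii_letters + string.digits + "!@#$%^&*-_=+"


def generate_archive_password(length: int = 32) -> str:
    """Return a random password containing all four character classes."""
    if length < 12:
        raise ValueError("use at least 12 characters")
    while True:  # retry until every class is present (fast for long passwords)
        pwd = "".join(secrets.choice(ALPHABET) for _ in range(length))
        if (any(c.islower() for c in pwd) and any(c.isupper() for c in pwd)
                and any(c.isdigit() for c in pwd)
                and any(not c.isalnum() for c in pwd)):
            return pwd
```

Store the generated password in your password vault before entering it in Rubrik.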

The Service Account JSON key is the key file you downloaded and kept safe when generating the key above.

Click Add.

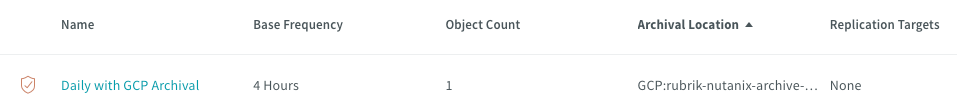

You should see a screen similar to this:

At this point, the archival location is set up and ready to use.

Create an SLA as usual, and in the second screen configure the following:

Now, you can assign objects to this SLA and the archival will kick in!

Back on the GCP side, you can now see files arriving in the bucket:

From the GCP Cloud Console, you can run a few commands to check the bucket's usage:
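The exact commands aren't reproduced here; from Cloud Shell, `gsutil du -s gs://<bucket>` is one way to get a total. For a scripted check, here is a Python sketch assuming `google-cloud-storage` and working credentials; the helper names are hypothetical.

```python
def human_size(num_bytes: int) -> str:
    """Format a byte count with binary units (KiB, MiB, ...)."""
    size = float(num_bytes)
    for unit in ("B", "KiB", "MiB", "GiB", "TiB"):
        if size < 1024 or unit == "TiB":
            return f"{size:.1f} {unit}"
        size /= 1024


def bucket_usage(bucket_name: str) -> tuple[int, int]:
    """Return (object count, total bytes) for a bucket.

    Needs google-cloud-storage and credentials. Listing objects is itself
    a billable operation, so don't poll a large bucket in a tight loop.
    """
    from google.cloud import storage  # imported lazily: only needed here

    count = total = 0
    for blob in storage.Client().list_blobs(bucket_name):
        count += 1
        total += blob.size or 0
    return count, total
```

For example, `count, total = bucket_usage("your-bucket")` followed by `print(count, human_size(total))` gives a quick summary.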

(Screenshots: "Both on-prem and in the cloud" and "Local only".)

Be careful when working with archives: the costs can be surprising in some cases (Nearline, for example, charges for data retrieval and for early deletion), so make sure you understand what you are doing. As a best practice, I would recommend keeping an on-prem buffer on some sort of cheap S3-compatible storage and sending only longer-term retention to the cloud, rather than pushing your archives directly to a public cloud provider.

Cloud providers usually offer a pricing calculator, which can be very useful. Here is a sample estimate from GCP for 10 TB with some retrieval operations:

Sample Class A operations: create buckets, upload objects, set bucket permissions, delete object permissions.

Sample Class B operations: download objects, view metadata, retrieve bucket and object permissions.
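To reason about such an estimate yourself, its main components can be sketched as below. All unit prices are placeholders to fill in from Google's current price list, which changes over time and by region; check there too whether operations are priced per 1,000 or per 10,000. The sketch ignores network egress and Nearline's 30-day minimum storage duration, which adds early-deletion charges if objects are removed sooner.

```python
from dataclasses import dataclass


@dataclass
class Prices:
    """Placeholder unit prices; take real values from Google's pricing page."""
    storage_per_gb_month: float
    retrieval_per_gb: float
    class_a_per_block: float
    class_b_per_block: float
    ops_block: int = 10_000  # number of operations each block price covers


def monthly_cost(p: Prices, stored_gb: float, retrieved_gb: float,
                 class_a_ops: int, class_b_ops: int) -> float:
    """Rough monthly cost: storage + retrieval + operations (egress ignored)."""
    return (stored_gb * p.storage_per_gb_month
            + retrieved_gb * p.retrieval_per_gb
            + class_a_ops / p.ops_block * p.class_a_per_block
            + class_b_ops / p.ops_block * p.class_b_per_block)
```

Keeping prices in a separate structure makes it easy to compare storage classes or regions by swapping in another `Prices` instance.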

Final note: as of now (Dec 2020), there is no way to move archived data from one archival location to another. Rubrik is considering this for a future release.

I hope this guide will help others struggling to configure archiving in the cloud.

That's it for today ;)
